Towards Analytics for Wholistic School Improvement: Hierarchical Process Modelling and Evidence Visualization

Ruth Deakin Crick

Systems Centre, Faculty of Engineering

University of Bristol, UK

Institute of Sustainable Futures, School of Education & Connected Intelligence Centre

University of Technology, Sydney, Australia

ruth.deakin-crick@bristol.ac.uk

Simon Knight

Connected Intelligence Centre

University of Technology Sydney, Australia

Steven Barr

Systems Centre, Faculty of Engineering

University of Bristol, UK

ABSTRACT. Central to the mission of most educational institutions is the task of preparing the next generation of citizens to contribute to society. Schools, colleges, and universities value a range of outcomes (e.g., problem solving, creativity, collaboration, citizenship, service to community) as well as academic outcomes in traditional subjects. Often referred to as “wider outcomes,” these are hard to quantify. While new kinds of monitoring technologies and public datasets expand the possibilities for quantifying these indices, we need ways to bring that data together to support sense-making and decision-making. Taking a systems perspective, the hierarchical process modelling (HPM) approach and the “Perimeta” visual analytic provide a dashboard that informs leadership decision-making with heterogeneous, often incomplete evidence. We report a prototype Perimeta model from education that aggregates wider outcomes data across a network of schools and calculates their cumulative contribution to key performance indicators, using the “Italian flag” visual analytic to make explicit not only the supporting evidence but also the challenging evidence and the areas of uncertainty. We discuss the nature of the modelling decisions and the implicit values involved in quantifying these kinds of educational outcomes.

Keywords: Learning analytics, academic analytics, leadership, decision support, complex systems, educational values, visualization, dashboard, uncertainty, risk, surveys

ISSN 1929-7750 (online). The Journal of Learning Analytics works under a Creative Commons License, Attribution - NonCommercial-NoDerivs 3.0 Unported (CC BY-NC-ND 3.0)

Deakin Crick, R., Knight, S., & Barr, S. (2017). Towards analytics for wholistic school improvement: Hierarchical process modelling and evidence visualisation. Journal of Learning Analytics, 4(2), 160–188. http://dx.doi.org/10.18608/jla.2017.42.13

1 INTRODUCTION TO THE CHALLENGE

The heralded growth of data-intensive, computational analytics tools, techniques, and platforms holds forth the promise of new ways for educational organizations to analyze their critical processes, track student progress, make and evaluate interventions in response to rapid feedback, and monitor impact and evaluate outcomes. There is real potential now for schools to measure, evaluate, and improve a range of key processes and outcomes by capturing complex data in a form that is practical and accessible for users and can inform next-best-action decisions for leaders, teachers, and indeed students in “real time.” Parallel to this growth in technology and analytics, thirty years of school effectiveness research has seen a shift of focus from evaluating schools as whole units to focusing on what is happening in individual classrooms. However, as MacBeath and McGlynn (2002) argue, this shift needs to be complemented by a wider focus on school culture and by school self-evaluation models that put student learning at the centre but are set in the context of a school culture that sustains 1) teacher and staff learning, 2) leadership that creates and maintains the culture, and 3) an outward-facing dimension involving learning in the home and community.

Formal and informal assessment and evaluation are at the heart of both learning and leadership. Identifying, collecting, and analyzing relevant information or data in order to form judgements about what needs to be learned, how to progress pedagogically, and whether an outcome has been achieved is core business for teachers and students as well as leaders (Ritchie & Deakin Crick, 2007). However, there is a tendency in education systems to measure those things that are easiest to measure and are most accessible both for traditional measurement and for political accountability frameworks, which by definition reinforce a particular embedded worldview valorizing a narrow set of academic outcomes and standardized performance measures. As MacBeath and McGlynn (2002) went on to argue:

In deciding what to evaluate there is an irresistible temptation to measure what is easiest and most accessible to measurement. Measurement of pupil attainment is unambiguously concrete and appealing because over a century and more we have honed the instruments for assessing attainment (and used them) for monitoring and comparing teacher effectiveness. (p. 7)

2 THE PURPOSE OF THIS PAPER

In this paper, we report on a proof-of-concept case study with a set of English academies in a multi-academy trust. The trust used the affordances of technology, complex systems modelling, and school self-evaluation to examine this tension by exploring a way to 1) create a model of their school as a complex system with a particular shared purpose including, but not limited to, standardized national curriculum test scores, 2) identify the data they believed would give them rich evidence about how well they were achieving that purpose, and 3) populate a leadership decision-making dashboard called Perimeta (Performance Through Intelligent Management), developed in the Engineering Faculty’s Systems Centre at the University of Bristol as a practical tool for supporting complex decision-making, originally in the oil industry. This is a novel use of learning analytics for evaluating school performance that attempts to do justice to schools as complex, contextualized, living systems. It draws on a range of data types representing a wholistic set of student outcomes, and uses computational analytics to analyze the resulting bespoke data set in a way that accounts for what is uncertain, as well as for what contributes positively or negatively to achieving the defined school purpose. The purpose of the study was to explore what might be possible and useful as a foundation for further research, development, and practice.

3 LEADERSHIP THAT SUPPORTS WIDER STUDENT OUTCOMES

The term “wider outcomes” should be clarified in two ways. First, “wider outcomes” might be taken to draw meaning only in relation to something “narrower” (namely, grades) rather than standing in its own right. Nevertheless, for historical reasons this is the term in use; it derives from the “data desert” era, in contrast to the “data ocean” in which education increasingly finds itself (Behrens & DiCerbo, 2014). Second, there is a connotation that these “wider outcomes” are secondary, when in fact a growing body of evidence points to the vital roles that these variables play in creating sustainable, deep learning.

This is a significant challenge for learning analytics in 21st century schools, and one that governments around the world are intensifying by identifying and promoting sets of “competences” for citizens that schools are required to “deliver” (e.g., Delors, 2000; European Commission, 2007; OECD, 2001; Rychen & Salganik, 2000), but which schools designed in the 19th and 20th centuries are arguably ill-equipped to deliver. Each government’s list varies, but common candidates for inclusion are “learning to learn,” “problem solving,” “relational capabilities,” “active citizenship,” and “entrepreneurial skills.” These types of “competences” or “capabilities” require students to develop not only analytical capabilities, but also hermeneutical and emancipatory capabilities, which bring new challenges for assessment and evaluation, and thus for the data forms and feedback practices of educators (Deakin Crick, 2017; Deakin Crick, Barr, Green, & Pedder, 2015; Joldersma & Deakin Crick, 2010). Developing pedagogies that actually lead to these types of student outcomes is challenging because it requires a significant shift in curriculum and assessment practices towards a focus on the processes of learning as well as the outcomes. In short, a reductionist focus on a single outcome measure will not suffice. This needs to be reflected in the ways in which school leaders use data, and business intelligence, to achieve their purposes (Goldspink, 2015; Goldspink & Foster, 2013; Harlen & Deakin Crick, 2003).

In recent years, progress has been made towards establishing a set of empirically grounded, holistic frameworks with some potential for assembly into an integrated set of measures that could provide evidence about these critical relationships and guide more effective policy intervention. The Consortium on Chicago School Research (CCSR) (Bryk, Sebring, Allensworth, Luppescu, & Easton, 2010) developed a theoretical and empirical framework that is holistic, participatory, and based on the understanding that “schools are complex organisations consisting of multiple interacting sub-systems. Each subsystem involves a mix of human and social factors that shape the activities that occur and the meaning that individuals attribute to these events. These social interactions are bounded by various rules, roles and prevailing practices that, in combination with technical resources, constitute schools as formal organisations. In a simple sense, almost everything interacts with everything else” (p. 45). Bryk et al. went on to identify five essential school supports (agents, processes, and structures) characteristic of improving schools, as measured by deep student engagement in the processes of learning and achievement, including standardized outcome measures. Each of these supports, stimulated by leadership, focuses on dynamic processes of change and learning. Together they explain how the organizational and relational dynamics of a school, including parents and community, interact with work inside its classrooms to advance student learning.

Several studies have concluded that the most successful systems, based on measures of student engagement and attainment, prioritize staff motivation and commitment, teaching and learning practices, and developing teachers’ capacities for leadership (West-Burnham, 2005; Gunter, 2001; Bottery, 2004). Darling-Hammond and Bransford (2005) have established the importance of effective teaching for supporting enhanced student engagement and achievement, and there is now much evidence behind the claim that leadership focusing on the quality of teaching is crucial for maintaining and supporting improvement in the quality of learning in schools (Robinson, Lloyd, & Rowe, 2009). At the heart of leadership for learning (e.g., MacBeath & Cheng, 2008) is the concern of enabling schools to become learning organizations that support teacher and student learning and reach out meaningfully to the communities they serve. As Silins and Mulford (2004) found in their comprehensive study of leadership effects on student and organizational learning, student outcomes are more likely to improve when leadership is distributed throughout the school community and when teachers are empowered in their spheres of interest and expertise. The McKinsey Report (2007), derived from an international survey of the most successful education systems, found that a focus on teacher recruitment and professional learning was a more important determinant of success, in terms of student learning and attainment, than funding, social background of students, regularity of external inspection, or class sizes. In Bryk et al.’s (2010) research, the most effective school leaders were catalytic agents for systemic improvement, synchronously and tenaciously focusing on new relationships with parents and community; building teachers’ professional capacity; creating a student-centred learning environment; and providing guidance about pedagogy and supports for teaching and learning.

Despite the importance of these features to learning in schools, Goldspink’s research shows that the leadership qualities required for this level of complexity are not among the typical selection criteria for school principals (Goldspink, 2015; Goldspink & Foster, 2013). These qualities include modesty, circumspection, and a capacity to question one’s own deepest assumptions while inviting others to participate in critical enquiry. These personal qualities, and the assumptions about leadership as a core systems process that underpin them, have not been widely adopted in education, and few school leaders are familiar with the relevant investigative, dispositional, and analytical processes (Zohar, 1997).

Significant bodies of research underpin the need for schools to focus on wider outcomes, such as student experience (Blackwell, Trzesniewski, & Dweck, 2007; Dweck, 2000); learning how to learn and resilient agency (Claxton, 2008; Deakin Crick, Huang, Ahmed Shafi, & Goldspink, 2015); and well-being and the complex problem-solving skills needed for successful living in the complex and interrelated world of the 21st century (McCombs & Miller, 2008; McCombs, Daniels, & Perry, 2008; Thomas & Seely Brown, 2009, 2011).

Indeed, the “Learning How to Learn” project in the UK, with over 1000 teachers and 4000 pupils across 40 schools, indicated that promoting learning “autonomy” was a key goal, enabling learners to reflect upon and understand their own learning processes and develop ways of regulating them. Teachers who successfully promoted learning how to learn in classroom lessons reported positive learning orientations and applied learning principles to shape and help regulate the learning processes of pupils throughout lessons rather than at specific points. Organizational structures and cultures geared towards leading learning, promoting enquiry, and networking and auditing expertise were significant conditions, providing opportunities for teachers and pupils to learn how to promote learning autonomy (James et al., 2007; Opfer & Pedder, 2011; Pedder, 2006, 2007; Pedder, James, & MacBeath, 2005).

4 A COMPLEX SYSTEMS APPROACH, WORLDVIEWS, AND ANALYTICS

We have previously drawn attention to the pedagogical and epistemological worldviews unavoidably embedded in how educational technologies are designed and deployed, highlighting some of the well-established qualities of deeper learning, such as learner disposition and identity (Buckingham Shum & Deakin Crick, 2012) and the sociocultural dynamics of discourse (Knight, Buckingham Shum, & Littleton, 2014), that are part of those “wider student outcomes.” We have considered the implications for learning analytics of a pedagogy that takes deep learning seriously, since the behavioural patterns associated with such learning processes are less easily quantified and captured than the simpler proxies that dominate learning analytics at present. This proof-of-concept study is located within this challenge: using data and technology to support and enhance leadership decision-making that facilitates deep learning and social change at all levels (leaders, teachers, students, parents/carers), in addition to the more familiar use of technology and data to measure and report on traditional assessment outcomes.

A worldview that accounts for the system as a whole is key to learning analytics aimed at addressing this challenge (Bryk, Gomez, Grunow, & LeMahieu, 2015; Bryk, Gomez, & Grunow, 2011; Bryk et al., 2010). Research and practice from systems thinking in health and industry (Checkland, 1999; Checkland & Scholes, 1999; Snowden & Boone, 2007; Sillitto, 2015) demonstrate that rigorous analysis of the whole system leads to the identification of locally owned improvement aims and thus a shared purpose. The boundaries of the system are aligned around its purpose rather than being determined by external regulatory frameworks (Blockley, 2010; Blockley & Godfrey, 2000; Cui & Blockley, 1990). Thus an alignment around purpose at all levels in a system (in this case leaders, teachers, students, parents/carers) will both require and enable data to be owned at all levels by those responsible for making the change and using data for actionable insights. Rather than the data being the province of system leaders or even researchers, it is owned and used for self-directed change by all stakeholders. The power, or the authorship, of decision-making is both inclusive and participatory. In such a systems approach, the learning processes of stakeholders at all levels are critical. Technical systems and analytics need to reflect this systems approach and the worldview in which it is embedded, and provide appropriate feedback at each level.

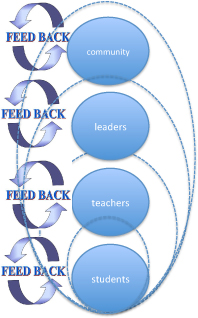

This systemic approach draws the boundaries of the learning environment more broadly, focusing on the core processes of learning and feedback at different levels of the system: leaders, teachers and students, and parents/carers in the wider community (Figure 1). Data about learning needs to be captured at each level, since each influences the others, and it needs to be modelled and represented in ways that enable actionable insights to support the community’s transformative efforts. Such “live” data provides a more holistic picture of the health of the learning system. Yeager, Bryk, Muhich, Hausman, and Morales (2013) describe these as “practical measures” for practitioners and researchers engaged in improvement research; such measures are necessary for educators to assess whether their teaching and learning strategies in the classroom are actually leading to the changes they hope and plan for, in real time, well before students become “academic casualties.” Although traditional outcome data (such as retention and attainment) are important, relying on these measures for improvement is analogous to “standing at the end of the production process and counting the number of broken widgets” (p. 39).

Figure 1: Learning and feedback at multiple levels in schools as complex systems.

5 LOCATING THE CHALLENGE IN COMPLEX SYSTEMS

In this paper, we aim to demonstrate the potential for quantifying, modelling, and visualizing some of these variables in order to provide insight and decision support for the leaders charged with monitoring the health of schools, who currently lack aggregate views of progress tuned to locally determined purposes. Central to this approach is a complex systems perspective on organizational improvement that draws attention to the inevitability of uncertainty in the evidence available for decision-making and the need for analytics to provide actionable insights at multiple levels in the system as part of an ongoing approach to improvement. There is a growing body of evidence that such approaches hold promise for improvement in education as well as in other domains; the work of the Carnegie Foundation in particular has made a substantial contribution to this field. Leadership decision-making based on meaningful data that provides actionable insights integrates organizational improvement and the professional learning of key stakeholders, since learning, especially professional learning, is the process through which an individual or team adapts to and regulates the ubiquitous flow of relevant information and data over time in the service of a purpose of value. In schools as complex systems, the flow of data is rapid and multi-faceted.

Thus the use of computational learning analytics to capture and represent complex data in context at meaningful levels of aggregation and abstraction is a promising field of enquiry. We argue that it may support a paradigm shift from organizations that respond reactively to external regulations to proactive, self-directed organizations capable of defining, measuring, and improving their own purposes and responding professionally to external regulatory frameworks. Thus, we expand the boundaries of the system in which we consider learners to be embedded, and we extend the notion of “learner” to include all stakeholders in the system: students, teachers, leaders, and parents/carers. Hence we expand the set of variables considered as candidates for data aggregation and learning analytics in educational organizations as multi-level, complex systems. Work on the utility and relevance of a systems approach to education and learning analytics has already begun (Ferguson et al., 2014; Macfadyen, Dawson, Pardo, & Gašević, 2014), and this study can be located within this promising strand of research.

Analytics for educational leaders have been referred to as academic and action analytics, to differentiate them from learning analytics designed for educators and learners (Ferguson, 2012; Piety, Hickey, & Bishop, 2014; Daniel, 2015). Academic analytics are described by Campbell, DeBlois, and Oblinger (2007) as “an engine to make decisions or guide actions. That engine consists of five steps: capture, report, predict, act, and refine.” They note that “administrative units, such as admissions and fund raising, remain the most common users of analytics in higher education today.” Action analytics is a related term, proposed by Norris, Baer, and Offerman (2009), to emphasize the need for benchmarking both within and across institutions, with particular emphasis on the development of practices that make the institutions effective.

The work reported here could be framed as academic analytics, but with a specific interest in quantifying wholistic educational outcomes that extend beyond those that have been the focus of academic/action analytics discourse to date. However, it could also be framed as professional learning analytics, since the same data points can be used either for formative feedback, which enables prospective action for improvement, or for summative feedback, which allows retrospective judgement against a benchmark. Both formative and summative assessment and evaluation are important throughout the lifecycle of a self-evaluating school system, enabling its leaders to form judgements about how well a process is being developed and to adapt and improve it.

Our fundamental questions are thus the following: If we acknowledge the importance of student attainment, but also have the ambition to educate our students for a set of wider outcomes, how can we know how well we are doing throughout the process, what we might need to do to improve, and how will we know when we have achieved our goals? How can we do this in systematic, sustainable, and convincing ways, and render these coherently as part of a richer vision of academic analytics for leaders, teachers, and students? The analytics challenge is to find ways to monitor the values and performance qualities deemed central to one’s organizational mission, which are harder to measure than the more common, readily quantified indicators such as demographics, call centre logs, online logins, grades, graduations, and so forth. As with any assessment regime or learning analytics deployment, the question is whether we can take advantage of an analytics infrastructure to quantify, monitor, and optimize these processes without, at the same time, undermining them through oversimplification, reductionism, or inappropriate interventions.

6 HIERARCHICAL PROCESS MODELLING

Hierarchical process modelling (HPM) is a knowledge structuring process, emerging from a systems thinking approach, that addresses practical problems in complex systems by generating a visual representation (or architecture) of the system (Davis & Fletcher, 2000; Davis, MacDonald, & White, 2010). There are different approaches to modelling complex systems: for example, causal loop modelling, which looks at the interactions between the parts of the system (Jackson, 2000; White, 2006), or Beer’s viable systems model, which models different levels of a system (Beer, 1984, 1985). However, the ECHO project team selected HPM (Davis, MacDonald, & White, 2010) because it is framed around the system’s purpose and thus has particular application to leadership decision-making in the context of high-stakes accountability frameworks. In particular, it offers a practical way of coming to grips with complexity and a forum for stakeholder engagement and participation in 1) defining and evaluating their particular purpose and 2) ongoing leadership decision-making based on a visual representation of data derived from critical core processes in the system.

The Perimeta software (Performance Through Intelligent Management), developed in the Engineering Faculty’s Systems Centre at the University of Bristol as a research tool for systems thinking, provides a visual modelling interface for building a hierarchical process model with interactive branches that can be expanded as required to different levels of granularity, and an underlying algorithm (detailed later) that accounts for uncertainty as well as for what is known, both positive and negative, about performance.
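To make the three-colour idea concrete, here is a minimal Python sketch, assuming the common interval-probability reading of the Italian flag: green is the share of belief backed by supporting evidence, red the share backed by challenging evidence, and white whatever remains uncommitted. The class name `ItalianFlag` and its methods are hypothetical illustrations, not Perimeta's interface or algorithm.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ItalianFlag:
    """Hypothetical evidence state for one process in the model.

    green: share of belief backed by supporting evidence
    red:   share of belief backed by challenging evidence
    white: the remainder, i.e. uncertainty (green + white + red == 1)
    """
    green: float
    red: float

    def __post_init__(self) -> None:
        # Reject flags whose committed shares are negative or exceed the whole.
        if self.green < 0 or self.red < 0 or self.green + self.red > 1.0 + 1e-9:
            raise ValueError("green and red must be non-negative and sum to at most 1")

    @property
    def white(self) -> float:
        # Uncertainty is never stored; it is whatever evidence does not cover.
        return 1.0 - self.green - self.red

    def as_interval(self) -> tuple[float, float]:
        # Lower and upper bounds on belief that the process is performing
        # as intended: [green, 1 - red]; the interval's width is the white.
        return (self.green, 1.0 - self.red)


flag = ItalianFlag(green=0.5, red=0.2)
print(round(flag.white, 2), flag.as_interval())
```

Reading the flag as the interval [green, 1 − red] means that more white simply widens the interval: missing evidence stays visible as uncertainty rather than being silently counted as either success or failure.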

Perimeta is a form of knowledge cartography (Selvin & Buckingham Shum, 2014) that provides emergent visualizations that feed back to a group the state of their collective understanding of their system at any particular point in time and at different levels of granularity. For instance, one could explore student attainment for the whole community or for an individual student, or explore levels of self-reported student engagement in learning by individual, by group, or by whole cohort. Thus, it should enable leaders to “drill down” into the data to explore what is or isn’t happening, or what is known and what is unknown, at several levels of the system, and, crucially, provide a heuristic for leadership decision-making. By modelling what is “unknown” about the system’s achievement of its core purpose, it encourages organizational learning: what is unknown but considered critical for success becomes a “site” of authentic enquiry for the system leaders. The evidence from other domains is documented elsewhere (Davis, MacDonald, & White, 2010). The pilot reported here presents the first evidence of its utility in an educational context.
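The “drill down” idea can be illustrated with a small, hypothetical example in which per-student survey scores are summarised as a flag for the whole cohort and for each tutor group. The records, the 1-to-5 scale, the `TARGET` threshold, and the rule that non-responses count as white are all assumptions made for illustration; this is not how Perimeta itself computes its flags.

```python
from collections import defaultdict
from typing import Optional

Flag = tuple[float, float, float]  # (green, white, red)

# Hypothetical records: (student_id, tutor_group, self-reported engagement
# on a 1-to-5 scale, or None where the student did not respond).
records: list[tuple[str, str, Optional[float]]] = [
    ("s1", "7A", 4.2), ("s2", "7A", 2.8), ("s3", "7A", None),
    ("s4", "7B", 3.9), ("s5", "7B", 4.5), ("s6", "7B", 3.1),
]

TARGET = 3.5  # hypothetical threshold for counting a student as "engaged"


def flag_for(scores: list[Optional[float]]) -> Flag:
    """Summarise scores as shares: green met the target, red responded
    but fell short, white gave no data (absence of evidence = uncertainty)."""
    if not scores:
        return (0.0, 1.0, 0.0)  # nothing known at all
    n = len(scores)
    green = sum(1 for s in scores if s is not None and s >= TARGET) / n
    red = sum(1 for s in scores if s is not None and s < TARGET) / n
    return (green, 1.0 - green - red, red)


def drill_down(rows) -> tuple[Flag, dict[str, Flag]]:
    """Return the cohort-level flag and one flag per tutor group."""
    by_group: dict[str, list[Optional[float]]] = defaultdict(list)
    for _, group, score in rows:
        by_group[group].append(score)
    cohort = flag_for([score for _, _, score in rows])
    return cohort, {g: flag_for(s) for g, s in sorted(by_group.items())}


cohort_flag, group_flags = drill_down(records)
print("cohort", [round(x, 2) for x in cohort_flag])
for group, flag in group_flags.items():
    print(group, [round(x, 2) for x in flag])
```

The same records yield a different picture at each level: group 7B has no white at all, while the one non-response in 7A shows up as uncertainty both for that group and, diluted, for the cohort.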

Hierarchical process modelling with the Perimeta software tool has three important characteristics:

- Aggregation of heterogeneous forms of evidence ranging in formality and source (permitting diverse stakeholder voices), e.g., numerical, formal prediction, opinion, narrative

- Modelling of supporting and challenging evidence, and uncertainty (absence of data)

- Visualization using an “Italian Flag” scheme (explained later)
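One way to picture the first two characteristics together is a single, hypothetical mapping from any kind of evidence to a flag. In this sketch each item states a position (how far it points towards supporting rather than challenging) and a confidence (how much belief it can bear); the unconfident remainder stays white, so missing data is simply zero confidence. The functions `to_flag`, `from_proportion`, and `from_rating` and their rules are illustrative assumptions, not part of Perimeta.

```python
def to_flag(position: float, confidence: float) -> tuple[float, float, float]:
    """Map one piece of evidence to a (green, white, red) flag.

    position:   0 = wholly challenging, 1 = wholly supporting.
    confidence: share of belief the evidence can carry (0 = none at all).
    Whatever the evidence cannot carry (1 - confidence) stays white.
    """
    if not (0.0 <= position <= 1.0 and 0.0 <= confidence <= 1.0):
        raise ValueError("position and confidence must lie in [0, 1]")
    green = confidence * position
    red = confidence * (1.0 - position)
    return (green, 1.0 - confidence, red)


def from_proportion(proportion_met: float, coverage: float) -> tuple[float, float, float]:
    # Numerical evidence, e.g. share of students meeting a target, with
    # coverage = share of the cohort for whom data exist (hypothetical rule).
    return to_flag(proportion_met, coverage)


def from_rating(rating: int, confidence: float, scale: int = 5) -> tuple[float, float, float]:
    # Opinion or coded narrative on a 1..scale rating, weighted by the
    # rater's own stated confidence (hypothetical rule).
    if not 1 <= rating <= scale:
        raise ValueError("rating out of range")
    return to_flag((rating - 1) / (scale - 1), confidence)


# Absence of data: no committed belief either way, so the flag is all white.
NO_DATA = to_flag(0.5, 0.0)

for label, flag in [
    ("test data", from_proportion(0.8, 0.9)),
    ("leader judgement", from_rating(4, 0.6)),
    ("no data", NO_DATA),
]:
    print(label, [round(x, 2) for x in flag])
```

The design choice here is that formality of evidence is expressed only through confidence: a confident numerical measure and a tentative opinion end up on the same three-colour scale and can then be compared or combined.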

Hierarchical process modelling involves an analysis by stakeholders of key processes, structured into a hierarchy showing how they drive the higher-level shared purpose of the organization. In schools, for example, student engagement in learning may be identified as a prerequisite for the development of higher-order thinking, and both of these may be understood as essential contributions to the overall outcome of student attainment. The next step is the identification of the salient parameters for a measurement model for these key processes, and the collection of relevant data that will be useful in judging how well they are working and how they may be improved. That data may take many forms: quantitative, qualitative, or narrative. Sillitto (2015) refers to this process as architecting systems and summarizes it thus:
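The roll-up from measured processes to the shared purpose can be sketched as a tree in which each parent's flag is the weighted average of its children's flags. This is a deliberately simple stand-in, assuming hypothetical weights and a hypothetical `Process` class; it is not Perimeta's aggregation algorithm. The example reuses the engagement and higher-order thinking processes from the paragraph above.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional

Flag = tuple[float, float, float]  # (green, white, red)
ALL_WHITE: Flag = (0.0, 1.0, 0.0)


@dataclass
class Process:
    """One node in a hypothetical hierarchical process model."""
    name: str
    weight: float = 1.0              # assumed relative importance to its parent
    evidence: Optional[Flag] = None  # only leaf processes carry direct evidence
    children: list[Process] = field(default_factory=list)

    def flag(self) -> Flag:
        if not self.children:
            # A leaf with no evidence is wholly uncertain.
            return self.evidence if self.evidence is not None else ALL_WHITE
        total = sum(child.weight for child in self.children)
        parts = [(child.weight / total, child.flag()) for child in self.children]
        # Weighted average, colour by colour; the result still sums to 1.
        green, white, red = (sum(w * f[i] for w, f in parts) for i in range(3))
        return (green, white, red)


attainment = Process("Student attainment", children=[
    Process("Engagement in learning", weight=0.4, evidence=(0.6, 0.3, 0.1)),
    Process("Higher-order thinking", weight=0.6),  # no data collected yet
])
print([round(x, 2) for x in attainment.flag()])
```

A weighted average is the simplest roll-up that keeps green + white + red = 1 and lets a data gap lower in the hierarchy surface as white at the top. It cannot, however, express that one process is a prerequisite for another, as engagement is for higher-order thinking above, so a fuller treatment would need an aggregation rule that models such dependencies.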

First, understand the situation. Then diagnose what’s wrong with it and what you want to improve. Then understand and align all the stakeholder purposes, interests and concerns. Agree “what good looks like” and establish a shared vision and measures of effectiveness for a solution. Then define the solution, the relationship between the parts and the purpose, and the checks required to ensure the purpose is achieved in a changing world. (p. xvii)

7 CASE STUDY METHODOLOGY

The methodology for the project proceeded through the following phases: 1) identifying the community-owned representation of purpose; 2) building the HPM; 3) defining the measurement model and data points, and collecting data; 4) entering and analyzing data in the Perimeta model; and 5) testing the outcomes through feedback to users and “use case” examples. These are addressed in the following sections, with an extended focus on the challenges of entering and analyzing data in the Perimeta model.

8 CASE STUDY CONTEXT

In the context we describe in this study, a multi-academy trust had formulated a shared, stakeholder-derived charter about the values, vision, and purpose that describe their expectations about student experiences and achievements. From the outset, this charter committed the trust to evaluating less easily measurable factors, such as the development of students as resilient, confident, and caring lifelong learners; the development and care of teachers that enable them to flourish professionally; and the meaningful involvement of parents and carers in student learning. The trust believed that to achieve its vision, teachers and leaders need to model such learning by adapting and responding to students in context. The “Evaluating Charter Outcomes” (ECHO) project was the vehicle for this proof-of-concept case study, focusing on one section of the charter, transforming learning, which, the trust hypothesized, would impact on the other two sections of the charter: transforming lives and transforming communities. The project worked with three secondary co-educational academies located in areas of disadvantage in the south of England, each with approximately 1100 students and 60 staff.

The challenge was to identify a “systems architecture” (Sillitto, 2015) that would provide a visual representation of the particular driving purpose of this multi-academy trust, followed by a measurement model that would guide the process of data capture, analysis, and feedback for improvement purposes. A “systems architecture sets out what the parts of the system are, what they do and how they fit and work together” (Sillitto, 2015, p. 4) and how they contribute to achieving the system’s purpose. It is a way of modelling a system (in this case a group of schools) that is complex — uncertain, unclear, incomplete, and unpredictable — in contrast to a system that is complicated but predictable (Snowden & Boone, 2007). Because schools operate in unique contexts, there can be no single blueprint for success; what works in one simply may not work in another. Schools are self-organizing, purposeful, layered, interdependent, and operate “far from equilibrium” (Checkland & Scholes, 1999; Davis & Sumara, 2006; Bower, 2004) and thus a reductionist focus on the measurement and improvement of a single standardized variable (for example, test results) distorts both the process and outcomes of the system (Assessment Reform Group, 1999, 2002; James & Gipps, 1998; Reay, 1999; Harlen & Deakin Crick, 2003). As Mason (2008) argues,

trying to isolate and quantify the salience of any particular factor is not only impossible, but also wrongheaded. Isolate, even hypothetically, any one factor and not only is the whole complex web of connections among the constituent factors altered — so is the influence of (probably) every other factor too. (p. 4)

8.1 Identifying the Community

The case study project took as its starting point the academy charter, which had been produced by the multi-academy trust during the three years prior to the project through a process of significant consultation with all stakeholders about what is distinctive about their educational philosophy and practice and the espoused values that underpin them. This charter already reflected a complex approach to schooling: the overall purpose of the charter was “Establishing and sustaining a group of high achieving learning communities that enables everyone to achieve their potential and refuses to put limits on achievement.” This would be achieved by three key processes of “transforming lives,” “transforming learning,” and “transforming communities.” The existing charter provided the foundation for the hierarchical process model, providing a rationale for the target constructs, and thus for the data variables to be included in the model, against which measurements (described in Section 8.3) were identified. It incorporated the multi-academy trust’s commitment to transforming learning for students, teachers, leaders, and parents/carers, and this provided the four “levels” at which these processes should be operating and be evaluated. The charter thus provided the key data points to be modelled and measured in this project; a report on the genesis of the three-year consultation that resulted in these variables is beyond the scope of this paper.

8.2 Building the Hierarchical Process Model

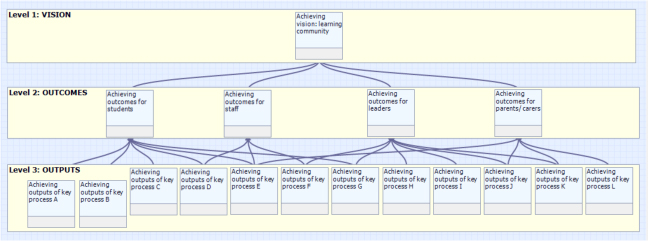

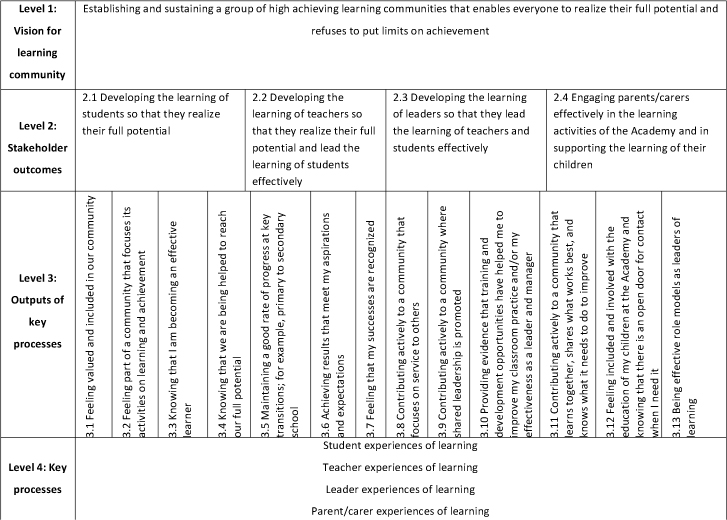

The charter purpose statement, focused on transforming learning, was adopted as level 1 in the hierarchical process model (see Figure 2 for a general model and Figure 3 for the detailed model in this case study). The level 1 “vision” was then decomposed into sub-process “outcomes” at level 2. Together these were considered by the improvement team to be necessary for achieving the overall purpose of level 1. A further level of decomposition resulted in a set of outputs at level 3, which were specific enough to be measurable and represented evidence of the processes of level 2, and would thus drive improvement towards the overall vision described by level 1. The model can also be called a “driver diagram” because it demonstrates the factors or processes that “drive” towards the achievement of a particular purpose (Bryk et al., 2015). The three levels constitute the hierarchical process model for this case study, described in detail in Figure 3, to which a fourth level is added describing the key processes (or activities) that result in the outputs described by level 3.

Figure 2: A simple diagram of a generic hierarchical process model.

Figure 3: The hierarchical process model developed for the ECHO project.
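The decomposition from vision to outcomes to outputs is a tree, and a small data structure makes that shape explicit. The sketch below is illustrative only: the class name `Process`, the numbering scheme and all statement wording below level 1 are hypothetical placeholders, not the ECHO model in Figure 3.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Process:
    """One node of a hierarchical process model (HPM)."""
    ident: str                  # hypothetical numbering, e.g. "1", "2.1", "3.1.1"
    statement: str              # what the process is meant to achieve
    children: list[Process] = field(default_factory=list)

    def add(self, child: Process) -> Process:
        """Attach a sub-process and return it so it can be decomposed further."""
        self.children.append(child)
        return child

    def depth(self) -> int:
        """Number of levels in the subtree rooted here (a leaf counts as 1)."""
        return 1 + max((c.depth() for c in self.children), default=0)

    def leaves(self) -> list[Process]:
        """The most specific processes, where measurement evidence is attached."""
        if not self.children:
            return [self]
        return [leaf for c in self.children for leaf in c.leaves()]


# Level 1: the charter vision (abbreviated).
vision = Process("1", "High achieving learning communities (charter purpose)")
# Level 2: outcomes (placeholder wording).
students = vision.add(Process("2.1", "Transforming learning for students"))
teachers = vision.add(Process("2.2", "Transforming learning for teachers"))
# Level 3: measurable outputs (placeholder wording).
students.add(Process("3.1.1", "Students show strong learning dispositions"))
teachers.add(Process("3.2.1", "Teachers are engaged in professional learning"))

print(vision.depth())                      # 3
print([p.ident for p in vision.leaves()])  # ['3.1.1', '3.2.1']
```

Keeping evidence only at the leaves mirrors the text: level 3 outputs are the points at which data are attached, and everything above them is estimated from that evidence.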

8.3 Defining the Measurement Model

Having constructed the hierarchical process model for the ECHO project schools, the next step was to identify data that could be collected from stakeholders and mapped onto the level 3 outputs to provide evidence about progress against each output. The following methods and instruments were identified to collect data for one or more of these level 3 outputs.

- A research validated dispositional survey instrument that measures student “learning dispositions” and orientation to learning (Buckingham Shum & Deakin Crick, 2012; Deakin Crick, Huang, Shafi, & Goldspink, 2015) (outcome 2.1)

- A validated teacher professional learning instrument that measures how engaged in learning teachers are in their practice (Pedder, 2007) (outcome 2.2)

- Bespoke surveys with questions to elicit evidence about some of the specific outcomes of the charter, particularly in relation to student satisfaction, opportunities for leadership, and quality of relationships (outcomes 2.1–4)

- Semi-structured interviews and narrative interviews with teachers, students, and leaders, focusing on their identification of stories of significant change (outcomes 2.1–4)

- Standard key performance indicators (KPIs), including test grades, attendance, behaviour, and demographics, including socio-economic status (outcomes 2.1–4).

Evidence was gathered via these instruments for input into the Perimeta software using two student questionnaires, one teacher and leader questionnaire, 20 semi-structured interviews with teachers, and 20 narrative interviews with students, and by collecting extant data held by the multi-academy trust on standardized attainment scores in English, Maths, and Science and on student behaviour. This took place over one school year. The instruments included research-validated survey tools as well as specifically designed questionnaires about the charter and standardized key performance indicators (KPIs). The responses to each questionnaire item or scale provided evidence that was mapped onto one of the level 3 output statements in the model. In some cases, the raw score for each question was used, but where research-validated scales existed, each scale was used as a single score representing the latent variable validated by previous research. The narrative interviews were conducted by senior teachers in each school according to a protocol, with a random sample of 10% of the students in one year group. These and the semi-structured interviews were recorded and transcribed, and then analyzed and moderated independently by two researchers for “stories of significant change,” rated on a scale of 1–4, where 1 indicated very little evidence of significant change in student attitude and approach to learning and 4 indicated significant evidence of such change. The ratings were based on a textual analysis of the qualities of student reflections on their learning experiences and identified evidence of actual behavioural or attitudinal change towards more effective and meaningful learning outcomes, for example evidence of student ownership of learning and students’ accounts of what they did differently as a result.
Performance data in the form of standardized assessment data in English, Maths and Science, and attendance data and behaviour concern data were also collected from the schools’ extant systems.
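The mapping step, in which items are used raw while validated scales are collapsed to one score per output, can be sketched as follows. All source names, output identifiers and item lists are hypothetical, and taking the mean of a scale’s items is an assumption made for illustration; the validated instruments may be scored differently.

```python
from __future__ import annotations

from statistics import mean

# Hypothetical mapping from evidence sources to level 3 output identifiers.
EVIDENCE_MAP = {
    "dispositions_scale": "3.1.1",  # validated scale: collapsed to one score
    "q7_feel_safe": "3.1.2",        # bespoke item: raw 1-4 score used directly
    "narrative_rating": "3.1.3",    # moderated story-of-change rating, 1-4
}
# Hypothetical item lists for research-validated scales.
VALIDATED_SCALES = {"dispositions_scale": ["d1", "d2", "d3"]}


def evidence_for_respondent(responses: dict[str, int]) -> dict[str, float]:
    """Collapse one respondent's answers into one score per level 3 output."""
    evidence: dict[str, float] = {}
    for source, output_id in EVIDENCE_MAP.items():
        if source in VALIDATED_SCALES:
            items = [responses[i] for i in VALIDATED_SCALES[source] if i in responses]
            if items:
                # Assumption: the scale score is the mean of its items.
                evidence[output_id] = float(mean(items))
        elif source in responses:
            evidence[output_id] = float(responses[source])
    return evidence


print(evidence_for_respondent({"d1": 3, "d2": 4, "d3": 2, "q7_feel_safe": 1}))
# {'3.1.1': 3.0, '3.1.2': 1.0}
```

Sources with no data (here the narrative rating) are simply omitted rather than filled in, which keeps incompleteness visible for the later uncertainty modelling.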

8.4 Entering and Analyzing Data

The data were collated by each school, passed on to the team and entered manually into the Perimeta model, which was housed on a local device. Had this process been automated (as is now possible), it would have made the Perimeta tool useful in real time. However, the purpose was to test the proof of concept so this arduous method was acceptable. The data collected was diverse, ranging from a rating scale of 1–4 based on moderated narrative analysis of texts, to latent variable scores on self-report questionnaires, individual item scores, and performance data.

The Perimeta software was designed to model complexity by accounting for uncertainty and incompleteness in the data, constituting the evidence available for decision making. The following section identifies three key challenges at the heart of this proof of concept study. First, how to model data to cater for uncertainty by drawing on the concepts of “confidence” and “performance” and translating traditional data into formats that model this. Second, how to model the interdependencies between the different processes and levels in the model, approximating “real life” as closely as possible. Third, how to estimate the overall performance of the system, with all its uncertainty; this includes an explanation of the algorithm that does so.

8.4.1 Data Challenges: Modelling Uncertainty

Standard reporting of questionnaire data frequently uses a mean score and a standard deviation around the mean. The degree of variation around the mean indicates a level of uncertainty in the data. In contextualized and complex situations, this variation is of great interest precisely because it reflects a degree of “fuzziness” or uncertainty. Depending on the size of the sample and other factors, some judgements can be made at scale across a meta-system from mean scores, but these do not provide information about the contextualized differences within particular settings. From an improvement perspective, it is the variation around the mean that matters (Bryk et al., 2015). The use of Likert scales for questionnaire responses adds to this uncertainty in the evidence, since responses range from “strongly disagree” to “strongly agree.” With any data, the degree of certainty upon which we can base decisions will vary, and thus so will the degree of confidence we can have in the data. A number of further considerations are relevant, for example, the respondent’s understanding of the question and their experience to answer it. The respondent may also be biased or in some other way misled.
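A small invented example shows why the mean alone can hide what matters for improvement: two groups can share a mean on a 1–4 item while having very different spreads.

```python
from collections import Counter
from statistics import mean, pstdev

# Two invented groups answering the same 1-4 Likert item.
consensus = [2, 3, 3, 2, 3, 2, 3, 2]  # everyone close to the middle
polarized = [1, 4, 4, 1, 4, 1, 4, 1]  # split between the extremes

for name, scores in [("consensus", consensus), ("polarized", polarized)]:
    print(name, mean(scores), round(pstdev(scores), 2), sorted(Counter(scores).items()))
# consensus 2.5 0.5 [(2, 4), (3, 4)]
# polarized 2.5 1.5 [(1, 4), (4, 4)]
```

Both groups would be reported as “2.5”, yet the second contains a subgroup with a strongly negative experience, which is exactly the contextual variation an improvement team would want to investigate.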

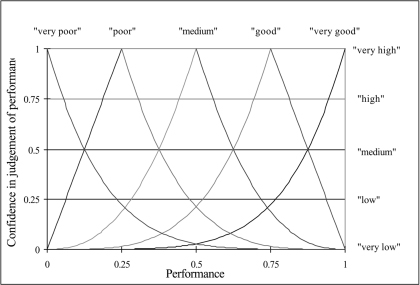

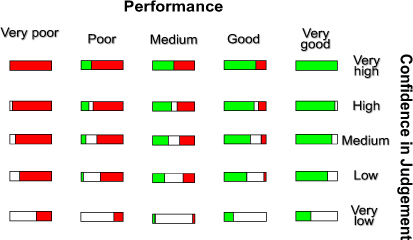

In an attempt to be more explicit about what is uncertain in such data, Hall, Le Masurier, Baker-Langman, Davis, and Taylor (2004) distinguish between the performance indicated by a measure and the confidence that we can have in that measure. They describe a technique for mapping from linguistic descriptions of “performance” and “confidence in judgment of performance” to interval values of performance compatible with Italian flag figures of merit — a simple visual red, white, and green representation of data that indicates what we know with confidence is good for our purpose (green), what we know with confidence is bad for our purpose (red), and what we are uncertain about (white).
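In interval probability theory, the usual reading of an Italian flag is that the lower bound of the interval is the evidence for (green), one minus the upper bound is the evidence against (red), and the gap between them is uncertainty (white). The sketch below encodes that reading; the class name `ItalianFlag` and the bound names `sn`/`sp` are our own labels, not Perimeta’s API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ItalianFlag:
    """An interval probability [sn, sp] drawn as green / white / red.

    green = sn       evidence for: known to be good for our purpose
    white = sp - sn  uncertainty: what we do not know
    red   = 1 - sp   evidence against: known to be bad for our purpose
    """
    sn: float  # lower bound on the probability of success
    sp: float  # upper bound on the probability of success

    def __post_init__(self) -> None:
        if not 0.0 <= self.sn <= self.sp <= 1.0:
            raise ValueError("an Italian flag needs 0 <= sn <= sp <= 1")

    @property
    def green(self) -> float:
        return self.sn

    @property
    def white(self) -> float:
        return self.sp - self.sn

    @property
    def red(self) -> float:
        return 1.0 - self.sp


flag = ItalianFlag(sn=0.5, sp=0.8)
print(round(flag.green, 2), round(flag.white, 2), round(flag.red, 2))  # 0.5 0.3 0.2
```

Because the three colours always sum to one, a flag that is mostly white signals missing or weak evidence rather than poor performance.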

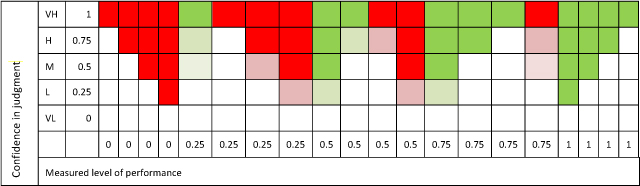

Figure 4 reproduces and adapts their illustration of an example of such mapping and presents an interpretation of Hall et al.’s (2004) mapping for a set of 25 discrete combinations of performance and confidence scales. The performance scale is a five-point linguistic scale, from “very poor” to “very good,” onto which the scores from 1 to 4 recorded by respondents to the ECHO project questionnaires were mapped. The questionnaire output value was visually mapped onto the “Hall” mapping chart in Figure 4.

Figure 4: Mapping from linguistic descriptions of “performance” and “confidence in judgment of performance” to interval values of performance (after Hall et al., 2004).
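The 5 × 5 grid of performance and confidence labels can be sketched as a lookup that places an interval around a performance point and widens it as confidence falls. The numeric anchors below are hypothetical and are not Hall et al.’s (2004) published values; the middle label “fair” is also an assumption.

```python
from __future__ import annotations

# Hypothetical anchor points: NOT Hall et al.'s (2004) published values.
PERFORMANCE = {"very poor": 0.0, "poor": 0.25, "fair": 0.5, "good": 0.75, "very good": 1.0}
# Lower confidence in the judgement -> wider interval -> more white.
CONFIDENCE_WIDTH = {"very high": 0.0, "high": 0.2, "medium": 0.4, "low": 0.6, "very low": 0.8}


def to_interval(performance: str, confidence: str) -> tuple[float, float]:
    """Map a linguistic (performance, confidence) pair to an interval [sn, sp]."""
    centre = PERFORMANCE[performance]
    half = CONFIDENCE_WIDTH[confidence] / 2
    # Clip to [0, 1]; near the ends of the scale this narrows the interval.
    sn = max(0.0, centre - half)
    sp = min(1.0, centre + half)
    return round(sn, 2), round(sp, 2)


table = {(p, c): to_interval(p, c) for p in PERFORMANCE for c in CONFIDENCE_WIDTH}
print(len(table))                             # 25
print(to_interval("very good", "very high"))  # (1.0, 1.0): full green
print(to_interval("fair", "very low"))        # (0.1, 0.9): mostly white
```

Clipping is the simplest choice but means very low confidence at the extremes of the scale produces less white than in the middle; a published mapping chart may treat the edges differently.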

Process performance functions were created by combining the Likert conversion of Figure 4 with the process performance measurement scales of Figure 5. At the input level, direct evidence of respondents’ raw scores (from 1 to 4) for each question was converted into Italian flag figures of merit, where the best possible (100%) performance was full green (respondent strongly agrees, and very high confidence by the researcher in the respondent) and the worst possible performance was mostly red (respondent strongly disagrees, but very low confidence in the respondent). For output processes (collated by question, by scale, by gender, by academy, and overall), the definitions of best and worst performance and all points in between were judged on a similar scale.

Figure 5: Interpretation of Hall et al. (2004).
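Collating input-level flags into output processes “by academy” or “by gender” amounts to grouping responses and summarizing their intervals. In the sketch below, both the 1–4 to interval conversion and the use of a plain average of the lower and upper bounds are assumptions for illustration; Perimeta propagates evidence with its own algorithm rather than this simple mean.

```python
from __future__ import annotations

from collections import defaultdict
from statistics import mean

# Hypothetical: centre of the interval for each 1-4 Likert answer.
SCORE_CENTRE = {1: 0.0, 2: 1 / 3, 3: 2 / 3, 4: 1.0}


def score_to_interval(score: int, width: float) -> tuple[float, float]:
    """Interval [sn, sp] for one answer; width reflects confidence in the respondent."""
    centre, half = SCORE_CENTRE[score], width / 2
    return max(0.0, centre - half), min(1.0, centre + half)


def collate(rows: list[dict], key: str) -> dict[str, tuple[float, float]]:
    """Average the bounds per group (assumption: a simple unweighted mean)."""
    groups: dict[str, list[tuple[float, float]]] = defaultdict(list)
    for row in rows:
        groups[row[key]].append(score_to_interval(row["score"], row["width"]))
    return {
        group: (mean(sn for sn, _ in ivs), mean(sp for _, sp in ivs))
        for group, ivs in groups.items()
    }


rows = [  # invented responses to one question
    {"academy": "A", "gender": "F", "score": 4, "width": 0.0},
    {"academy": "A", "gender": "M", "score": 2, "width": 0.0},
    {"academy": "B", "gender": "F", "score": 3, "width": 0.4},
]
print(collate(rows, "academy"))
print(collate(rows, "gender"))
```

The same rows can be regrouped by any field, which is the property that lets a dashboard offer several levels of granularity from one set of responses.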

8.4.2 Modelling the significance of inter-process relationships

The relationship between processes in the model also has an impact on performance, both hierarchically and laterally. The significance of pairwise relationships or links between “parent” and “child” processes in the hierarchical process model is calculated using three detailed attributes of sufficiency, necessity, and dependency defined by Davis and Fletcher (2000):

- The sufficiency or relevance of the evidence to its parent process is judged as a single number in the [0,1] range

- A sub-process is a necessity if the parent process cannot succeed without it; consequently, in the event of failure of the sub-process, the parent process fails

- Dependency is the degree of overlap between sub-processes and describes the degree of commonality in the sources of evidence

The same three attributes of sufficiency, necessity, and dependency were used to model the significance of links between processes defining “causes” and “effects.” Based on experience in modelling “many to one” performance relationships, and experience in school culture, the values indicated in Table 1 were assigned.
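A link record with the three attributes, and a check that the numeric ones stay in [0, 1], might look like the sketch below. The class name and field names are hypothetical, storing dependency on each link is a simplification (the text treats it as overlap between sibling sub-processes), and the example values are illustrative rather than those assigned in Table 1.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Link:
    """A parent-child link in an HPM, with Davis and Fletcher's three attributes."""
    parent: str
    child: str
    sufficiency: float  # relevance of the child's evidence to the parent, in [0, 1]
    necessary: bool     # True if the parent fails whenever the child fails
    dependency: float   # overlap with sibling evidence, in [0, 1] (stored per link here)

    def __post_init__(self) -> None:
        for name in ("sufficiency", "dependency"):
            value = getattr(self, name)
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{name} must lie in [0, 1], got {value}")


# Illustrative values only; they are not the values assigned in Table 1.
links = [
    Link("2.1", "3.1.1", sufficiency=0.6, necessary=True, dependency=0.2),
    Link("2.1", "3.1.2", sufficiency=0.3, necessary=False, dependency=0.2),
]
print([link.child for link in links if link.necessary])  # ['3.1.1']
```

Necessity is kept as a Boolean because that is how the software is described as treating it, while sufficiency and dependency are single numbers.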

8.4.3 Estimating system performance

Full system models of the features of each of the data streams were developed in the Perimeta toolkit, combining the following features defined above:

- A hierarchy linking the responses to questionnaire statements (input processes) in turn to output processes representing the performance by question, by participating academies, by respondent gender (where given) and overall

- Responses to questions using Likert ratings from 1 (“poor” or “strongly disagree”) to 4 (“very good” or “strongly agree”)

- Process performance functions using linguistic measures related to Likert rating scales

- Sufficiency, necessity and dependency ratings for each cause and effect relationship

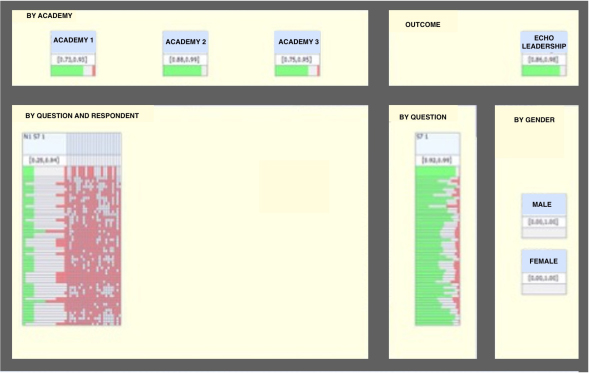

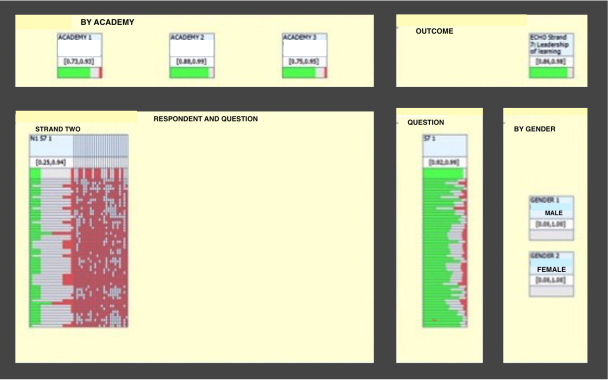

The Perimeta models used the “Juniper” algorithm to propagate the evidence and provide estimates of output performance by question, by gender, by academy and overall. A discussion of the details of the “Juniper” algorithm is beyond the scope of this paper but is reported elsewhere (Hall, Blockley, & Davis, 1998). The Perimeta models were each configured to produce a dashboard summary view as well as tabulated results for each question, each academy, each gender and overall. A sample datasheet from a Perimeta model is reproduced in Figure 6, below. The interpretation of the Italian flag visualization of data is presented in Figure 7, below.

Figure 6: Example data sheet showing data for strand 2, males only.

Figure 7: Interpreting the Italian flag.

8.4.4 Perimeta’s underlying model

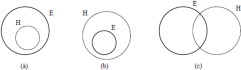

Davis and Fletcher (2000) have detailed the process of propagating evidence through a HPM hierarchy, which we summarize here. The method of propagation of evidence is based on the “interval probability theory” of Cui and Blockley (1990). Interval probability theory is a development of earlier interval models by Dempster (1969) and Shafer (1976). Consider the conjecture, H: that a process is going to be successful. In the ideal situation the dependability of a process to deliver its objectives is certain. However, in reality the certainty depends on the dependability of its sub-processes, about which we need evidence. Suppose also that there is evidence from a sub-process, E. We are concerned with the probability P(H) that H will be successful. The situation is illustrated using the Venn diagrams in Figure 8.

Figure 8: Venn diagrams of the logical conditions (a) E necessary for H, (b) E sufficient for H, and (c) E relevant to H, respectively.

Interestingly, the last condition (c) is the most general and can be considered a generalization of (b) since the evidence is now only partially sufficient. The computation of the contribution of the evidence E to the conjecture H has been implemented in the method of Hall et al. (1998), considering only the accumulation of evidence from the sub-processes adding to the knowledge about H.

$$P(H) = P(H \mid E)\,P(E)$$
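A worked instance of this single-evidence case, with invented numbers: if the sufficiency of E for H is taken as a point value and the dependability of E is known only as an interval, multiplying the bounds (valid because all quantities are non-negative) gives an interval for P(H).

$$P(H \mid E) = 0.8, \qquad P(E) = [0.6,\ 0.9]$$

$$P(H) = P(H \mid E)\,P(E) = [0.8 \times 0.6,\ 0.8 \times 0.9] = [0.48,\ 0.72]$$

The width of the result is inherited from the uncertainty in P(E): the sufficiency judgement scales the interval but does not narrow it.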

It is necessary to look at what happens when two or more pieces of evidence from two or more sub-processes are accumulated. It can be seen from Figure 9 that a number of variables need to be known to deduce the probability of success of the process (conjecture H). First, the probability that the evidence itself is dependable is required. This probability, $P(E)$, may be elicited directly or may come from the sub-process’s own daughter processes. Then the relevance or level of “sufficiency” of E1 and E2 to H, $P(H \mid E)$, is needed.

Figure 9: The contributions of two sub-processes E1 & E2 to conjecture H.

When there is more than one sub-process, the dependency or overlap between them is needed so that common evidence is not counted twice. In the total probability method of Hall et al. (1998), further judgements are required to specify the $P(H \mid \neg E)$ terms.
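The role of dependency, avoiding double counting of shared evidence, can be illustrated with a simplified heuristic. This sketch is our own and is not the total probability method of Hall et al. (1998) nor Perimeta’s “Juniper” algorithm: it weights each sub-process’s evidence for and against by its sufficiency, adds the two contributions, and subtracts a dependency-weighted share of the smaller one, so that dependency 0 adds fully and dependency 1 counts only the larger contribution.

```python
from __future__ import annotations


def support(sufficiency: float, flag: tuple[float, float]) -> tuple[float, float]:
    """Evidence (for, against) H contributed by one sub-process with interval (sn, sp)."""
    sn, sp = flag
    return sufficiency * sn, sufficiency * (1.0 - sp)


def accumulate(a: float, b: float, dependency: float) -> float:
    """Add two supports, removing the shared part so it is not counted twice.

    dependency = 0: no common evidence, the supports simply add.
    dependency = 1: full overlap, only the larger support counts.
    """
    return a + b - dependency * min(a, b)


def combine(s1, flag1, s2, flag2, dependency):
    """Interval (sn, sp) for parent H from two sub-processes E1 and E2."""
    for1, against1 = support(s1, flag1)
    for2, against2 = support(s2, flag2)
    f = accumulate(for1, for2, dependency)
    a = accumulate(against1, against2, dependency)
    if f + a > 1.0:  # conflicting evidence: rescale so that 0 <= sn <= sp <= 1
        f, a = f / (f + a), a / (f + a)
    return f, 1.0 - a


sn, sp = combine(0.6, (0.8, 0.9), 0.5, (0.5, 0.7), dependency=0.5)
print(round(sn, 3), round(sp, 3))  # 0.605 0.82
```

Any evidence not accounted for by the weighted contributions remains white, so partial sufficiency shows up as uncertainty rather than as evidence against.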

The necessary condition expressed in Figure 9, “E necessary for H,” comes out naturally as part of the total probability approach; if, for example, both E1 and E2 are necessary conditions, then:

where the value [0,0] represents the interval probability with 0 meaning no support for the conjecture and full support against, i.e., since E1 and E2 are necessary, anything not E1 and not E2 has no contribution to make.

Because the method implemented in the software uses no $P(H \mid \neg E)$ terms, it has no way of expressing this directly, and so a heuristic has been introduced to express the weight of the necessary condition (Figure 9). In practice, the heuristic serves to recognize strong evidence against the success of a process that may ultimately lead to the failure of the parent process.

The software algorithm uses the heuristic described above and only single probability numbers for the expressions of sufficiency and dependency and a Boolean operator for necessity. The advantage of this approach over other algorithms is that process models can be built and explored very quickly and have been found in practice to be powerful tools for illuminating the decision making process (Sillitto, 2015).
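The paper does not specify Perimeta’s necessity heuristic, so the following is only one simple rule consistent with the logic of necessity: if E is necessary for H, then H cannot be more likely to succeed than E, so each necessary child caps the parent’s upper bound. The function name and data layout are hypothetical.

```python
from __future__ import annotations


def apply_necessity(
    parent: tuple[float, float],
    children: list[tuple[tuple[float, float], bool]],
) -> tuple[float, float]:
    """Cap a parent's interval (sn, sp) using its necessary children.

    If a child is necessary, the parent cannot be more likely to succeed
    than that child, so strong evidence against the child (a low sp, i.e.
    a lot of red) caps the parent's upper bound.
    """
    sn, sp = parent
    for (_, child_sp), necessary in children:
        if necessary:
            sp = min(sp, child_sp)
    return min(sn, sp), sp


children = [((0.1, 0.3), True), ((0.9, 1.0), False)]
print(apply_necessity((0.6, 0.9), children))  # (0.3, 0.3)
```

Here a single necessary child with mostly red evidence overrides otherwise favourable support, which is the behaviour the text describes: strong evidence against one essential sub-process propagates as evidence against its parent.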

8.5 Testing the Outcomes through Feedback

The visualization of system effectiveness that can be derived from the Perimeta software is a snapshot of performance against valued processes and outcomes at any particular point in time. For this pilot, the process was not web based or automated, so the multi-academy trust leadership team were dependent on the research team providing the visual feedback and contributing to the interpretation of the discussions. However, leaders were able to interrogate the data from different perspectives and at differing levels of granularity.

Suppose, as a hypothetical example, that the multi-academy trust leadership team wanted to know how girls in their academies experienced learning so that they could develop improvement aims. They could operate the dashboard at various levels of granularity (the whole cohort of schools, school by school, and by gender), focusing on the level 2 outcome: developing the learning of students so that they realize their full potential. Figure 10 presents the dashboard for the three academies, with the overall results by academy presented using the Italian flag visualization in the top left-hand corner. This immediately suggests that female students in one academy have more positive experiences of learning than those in another (data were not provided for this strand of evidence from Academy 2). Both academies also showed a degree of uncertainty, which is an area for further investigation. For Academy 3, there was a significant amount of certainty about what was not happening in terms of female students’ perceptions of learning. The bottom left quadrant of the dashboard shows each student’s response to each question (layered) so that individual variation in responses can be interrogated. The summary chart for all students who provided data across the three academies is in the box to the right. An immediate interpretation is that some questions produced a negative result, while considerable uncertainty surrounds others.

Figure 10: Perimeta dashboard for the female population in three academies on strand 2

The dashboard enables drilling down into the data to explore these indications further. One example of the actionable insights that could be derived from this process is provided in Table 2, which shows the questions asked and the final score on the Italian flag for female students in the whole cohort. In this case, the results suggest that female students might benefit from more opportunities to develop their leadership capabilities and to have their achievements recognized. Drilling down into the male data in a similar way showed a different outcome, with much uncertainty among male students about “feeling safe” in the academy.
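The drill-down step that turns flags into candidate improvement aims can be sketched as sorting questions into clear concerns (much red) and open questions (much white). The thresholds, question wording and figures below are all invented for illustration and are not the values in Table 2.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QuestionResult:
    question: str
    green: float
    white: float
    red: float


def flag_for_attention(results, red_threshold=0.4, white_threshold=0.4):
    """Split questions into clear concerns (much red) and open questions (much white)."""
    concerns = sorted(
        (r for r in results if r.red >= red_threshold), key=lambda r: r.red, reverse=True
    )
    uncertain = sorted(
        (r for r in results if r.red < red_threshold and r.white >= white_threshold),
        key=lambda r: r.white,
        reverse=True,
    )
    return [r.question for r in concerns], [r.question for r in uncertain]


results = [  # invented figures, not the values in Table 2
    QuestionResult("I have opportunities to lead", 0.2, 0.2, 0.6),
    QuestionResult("My achievements are recognized", 0.3, 0.2, 0.5),
    QuestionResult("I feel safe in the academy", 0.3, 0.5, 0.2),
    QuestionResult("I enjoy learning here", 0.7, 0.2, 0.1),
]
print(flag_for_attention(results))
```

Separating red from white matters for action: red points to a known problem to address, whereas white points to a gap in evidence to investigate before acting.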

9 DISCUSSION

The work described in this paper provides a proof of concept of the affordances of hierarchical process modelling and the use of the Perimeta software for educational leadership decision making. Specifically, we have indicated how this approach to decision making can generate a more balanced and holistic snapshot of a school’s performance for improvement planning. Initial analysis suggests that this approach is promising.

Perhaps the most significant “promise” is in the ability to link extended self-evaluation and ongoing improvement strategies with performance management and school improvement planning. Although the proof of concept exercise was not automated, and it was not taken up in practice beyond this project, its real value was in the opportunity to link meaningful professional learning with overall school improvement strategies in a way that moves beyond simple compliance with “external regulation” towards a locally devised and determined improvement process. The system made data available to teachers and leaders at all levels, requiring them to make decisions about how to improve on relevant processes and outcomes. By definition, this requires professional learning capability and data literacy on the part of staff. Crucially, this property of the approach enables context responsive local decision making rather than simply following an externally derived regulatory framework, or a pre-determined “textbook” solution.

The approach is rooted in a worldview that draws on complexity as the condition in which we operate and on integration as a practical epistemology when seeking to improve. It also assumes that the responsibility for improvement must be aligned with the authority to improve — if a system, such as a school, is to be sustainable then it has to be self-organizing; it cannot rely on external regulation. Important to building this data literacy is the development of technology to collect and model data in such a way that it can provide meaningful visual feedback to system leaders that connects to their experiential knowledge and commitments, as well as their need for evidence and provides signposts for improvement strategies. Linked to this capability is the practical need of improvement science to go beyond the use of “mean scores” and to drill down into the contextual differences embedded in the variations around the means and the interactions between variables in a real world context.

Using learning analytics for leadership decision making is critical for approaches to improvement that go beyond either “top down” school effectiveness approaches or “bottom up” school practice led improvement strategies. Such an approach is being developed successfully by Bryk and colleagues (Bryk & Gomez, 2008; Bryk, Gomez, & Grunow, 2011; Bryk, Sebring, Allensworth, Luppescu, & Easton, 2009) in the Carnegie Foundation for the Advancement of Teaching’s Improvement Research, located in Networked Improvement Communities.1

What is also interesting about this approach and the Perimeta modelling is that it acknowledges that, in engaging with human communities, there is a need to collect and integrate a fuller range of data than other approaches do. In Habermasian terms (Joldersma & Deakin Crick, 2010), this approach is capable of integrating empirical analytical data, hermeneutical data (concerned with interpretation), and emancipatory data (concerned with the human need for fulfillment and freedom). Whilst standardized outcomes are important (empirical data), so too are stakeholder perceptions (hermeneutical data) and indeed emancipatory data, that is, the extent to which stakeholders feel empowered to pursue their lifelong learning journeys. The latter was collected through the stories of transformation in strand 3.

9.1 Limitations of This Project

The intent of the work reported in this paper was to provide a proof of concept of the HPM approach to improvement for wider learning outcomes. As such, there are a number of limitations to the scope of the findings, not least of which is the fact that the data collection and input processes were not automated. In this case, each academy produced its own data, which was collated centrally and then entered manually into the Perimeta model located on a device, rather than on the web. The time this takes alone renders the approach impractical in routine use, and therefore a primary concern has been to produce the necessary automation. This has included the creation of a “Surveys for Open Learning Analytics” platform2 capable of collecting questionnaire data across organisations and groups, providing rapid feedback to users whilst collating, storing, and exporting raw data as appropriate into knowledge structuring tools such as Perimeta. The second imperative is for a tool such as Perimeta to be web-based, so that stakeholders can access it in situ and in time to benefit from any actionable insights.

A second issue was around quality assurance and the mapping of more traditional social science data onto the Italian Flag model. A great deal of time was spent analyzing and comparing the outputs of the different strands of evidence from the perspective of “traditional social science” and the “integrated approach” and to the extent allowed by the pilot project, we were satisfied that there was scope for some confidence and development.

A third major concern surrounded the usability of tools and dashboards. As in industry, educational leaders increasingly have to deal with rapidly changing, complex data and to use that data to improve and change. What is required of leaders is the capability to learn rapidly on the job, to be agile and adaptive in constantly changing situations, and to be able to flex and change with the process. A significant professional learning requirement is built into an approach like this, which integrates research with practice and requires an unprecedented level of data literacy as well as a philosophical shift towards a participatory, integral worldview. Clearly, for adoption of such an approach, organizational training and coordination would be required (for Perimeta and HPM in this case), as well as developing the organization as a learning organization.

Despite the limitations of this proof of concept, the findings were reported back to the trust leadership team, including the academy principals. The approach was welcomed because 1) it offered an alternative data management approach that did not abandon necessary compliance with regulators but equally valued the locally determined purposes and values of the community, and 2) it provided useful information about performance (not reported here) in terms of leadership culture, curriculum development and student outcomes, and the learning of teachers and middle leaders.

Of course, the specific HPM identified in this paper, with its target constructs and measurements, may not be generalizable beyond the particular academy trust in which it was developed and deployed. However, the paper provides a proof of concept for the application of an HPM approach in building school improvement.

9.2 Conclusions

We have argued that the scope of learning analytics should include the “wider outcomes” that many educational institutions consider central to their mission. These wider learning outcomes go beyond conventional academic grades in standardized tests, including both wider student learning outcomes (such as “21st century learning”) and organizational learning considerations (Buckingham Shum & Deakin Crick, 2016). This proof of concept with a leading multi-academy trust in the UK has gone some way to demonstrating the potential of this approach and its use to leaders. Future research and development should include 1) automating the process of both data collection and entry into a web-based Perimeta model, 2) research into the forms and types of data that can reliably report on the sorts of wider outcomes discussed in this study, and 3) the ways in which schools can organize themselves and their improvement processes to take advantage of these possibilities and make professional learning part of the everyday work of improvement.

The worldview paradigm underpinning this approach incorporates a complex systems perspective on organizational improvement, drawing attention to the inevitability of uncertainty in the evidence available for decision making and the need to provide analytics with actionable insights at multiple levels in the system as part of an ongoing approach to improvement.

We have shown how it is possible for a Leadership Decision Making Dashboard to provide actionable insights, potentially integrating organizational improvement and the professional learning of key stakeholders. In schools as complex systems, the flow of data is rapid and multifaceted. Thus the use of computational learning analytics to capture and represent complex data in context at meaningful levels of aggregation and abstraction is a promising field of enquiry because of the speed with which data can be fed back into learning loops and because complex data can be represented visually to provide leaders with “snapshots” of performance at any point in time. We have argued that it may support a paradigm shift from organizations that respond reactively to external regulations to proactive self-organizing systems capable of defining, measuring, and improving their own purposes. Thus, we argue that learning analytics should incorporate learning data for individuals, teams, and organizations and that when this can be aligned with organizational purposes and integrated with an improvement science approach, we push the boundaries of the system in which we consider learners to be embedded, and hence the set of variables considered as candidates for data aggregation and learning analytics.

REFERENCES

Assessment Reform Group. (1999). Assessment for learning: Beyond the black box. Cambridge, UK: University of Cambridge School of Education.

Assessment Reform Group. (2002). Testing, motivation and learning. Cambridge, UK: Assessment Reform Group.

Beer, S. (1984). The viable system model: Its provenance, development, methodology and pathology. Journal of the Operational Research Society, 35(1), 7–25. http://dx.doi.org/10.1057/jors.1984.2

Beer, S. (1985). Diagnosing the system for organisations. Hoboken, NJ: John Wiley.

Behrens, J., & DiCerbo, K. (2014). Harnessing the currents of the digital ocean. In J. Larusson & B. White (Eds.), Learning analytics: From research to practice. New York: Springer. http://dx.doi.org/10.1007/978-1-4614-3305-7_3

Blackwell, L. S., Trzesniewski, K. H., & Dweck, C. S. (2007). Implicit theories of intelligence predict achievement across an adolescent transition: A longitudinal study and an intervention. Child Development, 78(1), 246–263. http://dx.doi.org/10.1111/j.1467-8624.2007.00995.x

Blockley, D. (2010). The importance of being process. Civil Engineering and Environmental Systems, 27(3), 189–199. http://dx.doi.org/10.1080/10286608.2010.482658

Blockley, D., & Godfrey, P. (2000). Doing it differently: Systems for rethinking construction. London: Telford.

Bottery, M. (2004). The challenges of educational leadership. London: Paul Chapman.

Bower, D. F. (2004). Leadership and the self-organizing school. Paper presented at the Complexity Science and Educational Research Conference, Kingston, Ontario, Canada.

Bryk, A., & Gomez, L. (2008). Ruminations on reinventing an R&D capacity for educational improvement. Paper presented at The Supply Side of School Reform and the Future of Educational Entrepreneurship, Stanford, CA.

Bryk, A. S., Sebring, P. B., Allensworth, E., Luppescu, S., & Easton, J. Q. (2009). Organizing schools for improvement: Lessons from Chicago. Chicago, IL: University of Chicago Press.

Bryk, A., Gomez, L. M., & Grunow, A. (2011). Getting ideas into action: Building networked improvement communities in education. In M. T. Hallinan (Ed.), Frontiers in Sociology of Education (pp. 127–162). Netherlands: Springer. http://dx.doi.org/10.1007/978-94-007-1576-9_7

Bryk, A., Gomez, L., Grunow, A., & LeMahieu, P. (2015). Learning to improve: How America’s schools can get better at getting better. Cambridge, MA: Harvard Education Press.

Bryk, A., Sebring, P., Allensworth, E., Luppescu, S., & Easton, J. (2010). Organizing schools for improvement: Lessons from Chicago. Chicago, IL: University of Chicago Press.

Buckingham Shum, S., & Deakin Crick, R. (2012). Learning dispositions and transferable competencies: Pedagogy, modelling and learning analytics. Proceedings of the 2nd International Conference on Learning Analytics and Knowledge (LAK ’12), 29 April–2 May 2012, Vancouver, BC, Canada (pp. 92–101). New York: ACM. http://dx.doi.org/10.1145/2330601.2330629

Buckingham Shum, S., & Deakin Crick, R. (2016). Learning analytics for 21st century competencies. Journal of Learning Analytics, 3(2). http://dx.doi.org/10.18608/jla.2016.32.2

Campbell, J., DeBlois, P., & Oblinger, D. (2007). Academic analytics: A new tool for a new era. Educause Review, 42(4), 40–57.

Checkland, P. (1999). Systems thinking, systems practice. Chichester, UK: John Wiley.

Checkland, P., & Scholes, J. (1999). Soft systems methodology in action. Chichester, UK: John Wiley.

Claxton, G. (2008). Expanding the capacity to learn: A new end for education? Opening Keynote Address, British Educational Research Association Annual Conference, 6 September 2006, Warwick University.

Cui, W., & Blockley, D. (1990). Interval probability theory for evidential support. International Journal of Intelligent Systems, 5(2), 183–192. http://dx.doi.org/10.1002/int.4550050204

Daniel, B. (2015). Big Data and analytics in higher education: Opportunities and challenges. British Journal of Educational Technology, 46(5), 904–920. http://dx.doi.org/10.1111/bjet.12230

Darling-Hammond, L., & Bransford, J. (2005). Preparing teachers for a changing world: What teachers should learn and be able to do. San Francisco, CA: Jossey-Bass.

Davis, B., & Sumara, D. (2006). Complexity and education: Inquiries into learning, teaching and research. London: Routledge.

Davis, J., & Fletcher, P. (2000). Managing assets under uncertainty. Society of Petroleum Engineers Asia Pacific Conference on Integrated Modelling for Asset Management (APCIMAM 2000), 25–26 April 2000, Yokohama, Japan.

Davis, J., MacDonald, A., & White, L. (2010). Problem-structuring methods and project management: An example of stakeholder involvement using Hierarchical Process Modelling methodology. Journal of the Operational Research Society, 61, 893–904. http://dx.doi.org/10.1057/jors.2010.12

Deakin Crick, R. (2017). Learning analytics: Layers, loops and processes in a virtual learning infrastructure. In G. Siemens & C. Lang (Eds.), Handbook of learning analytics & educational data mining. Society of Learning Analytics Research (SoLAR). http://dx.doi.org/10.18608/hla17.025

Deakin Crick, R., Barr, S., Green, H., & Pedder, D. (2015). Evaluating the wider outcomes of schools: Complex systems modelling. Educational Management Administration and Leadership, 45(4). http://dx.doi.org/10.1177/1741143215597233

Deakin Crick, R., Huang, S., Ahmed Shafi, A., & Goldspink, C. (2015). Developing resilient agency in learning: The internal structure of learning power. British Journal of Educational Studies, 63(2), 121–160. http://dx.doi.org/10.1080/00071005.2015.1006574

Delors, J. (2000). Learning: The treasure within. http://www.teacherswithoutborders.org/pdf/Delors.pdf

Dempster, A. (1969). Elements of continuous multivariate analysis. Reading, MA: Addison-Wesley.

Dweck, C. S. (2000). Self-theories: Their role in motivation, personality, and development. New York: Psychology Press.

European Commission. (2007). Key competences for lifelong learning: European reference framework. Luxembourg: Publications Office of the European Union.

Ferguson, R. (2012). Learning analytics: Drivers, developments and challenges. International Journal of Technology Enhanced Learning, 4(5/6), 304–317. https://doi.org/10.1504/IJTEL.2012.051816

Ferguson, R., Clow, D., Macfadyen, L., Essa, A., Dawson, S., & Alexander, S. (2014). Setting learning analytics in context: Overcoming the barriers to large-scale adoption. Proceedings of the 4th International Conference on Learning Analytics and Knowledge (LAK ’14), 24–28 March 2014, Indianapolis, IN, USA (pp. 251–253). New York: ACM. http://dx.doi.org/10.1145/2567574.2567592

Goldspink, C. (2015). Communities making a difference national partnerships SA teaching for effective learning pedagogy research project 2010–2013. Adelaide: South Australia Department for Education and Child Development.

Goldspink, C., & Foster, M. (2013). A conceptual model and set of instruments for measuring student engagement in learning. Cambridge Journal of Education, 43(3), 291–311. http://dx.doi.org/10.1080/0305764x.2013.776513

Gunter, H. (2001). Leaders and leadership in education. London: Paul Chapman.

Hall, J., Blockley, D., & Davis, J. (1998). Uncertain inference using interval probability theory. International Journal of Approximate Reasoning, 19, 247–264. https://dx.doi.org/10.1016/S0888-613X(98)10010-5

Hall, J., Le Masurier, J., Baker-Langman, E., Davis, J., & Taylor, C. (2004). Decision-support methodology for performance-based asset management. Civil Engineering and Environmental Systems, 21, 51–75. http://dx.doi.org/10.1080/1028660031000135086

Harlen, W., & Deakin Crick, R. (2003). Testing and motivation for learning. Assessment in Education, 10(2), 169–207. http://dx.doi.org/10.1080/0969594032000121270

Jackson, M. C. (2000). Systems approaches to management. London: Kluwer Academic.

James, M., & Gipps, C. (1998). Broadening the basis of assessment to prevent the narrowing of learning. The Curriculum Journal, 9(3), 285–297. http://dx.doi.org/10.1080/0958517970090303

James, M., McCormick, R., Black, P., Carmichael, P., Drummond, M., Fox, A., . . . Wiliam, D. (2007). Improving learning how to learn: Classrooms, schools and networks. London: Routledge.

Joldersma, C., & Deakin Crick, R. (2010). Citizenship, discourse ethics and an emancipatory model of lifelong learning. London: Routledge.

Knight, S., Buckingham Shum, S., & Littleton, K. (2014). Epistemology, assessment, pedagogy: Where learning meets analytics in the middle space. Journal of Learning Analytics, 1(2), 23–47. http://dx.doi.org/10.18608/jla.2014.12.3

MacBeath, J., & Cheng, Y. (2008). Leadership for learning: International perspectives. London: Routledge.

MacBeath, J., & McGlynn, A. (2002). Self-evaluation: What’s in it for schools? London: RoutledgeFalmer.

Macfadyen, L., Dawson, S., Pardo, A., & Gašević, D. (2014). Embracing big data in complex educational systems: The learning analytics imperative and the policy challenge. Research & Practice in Assessment, 9, 17–28. http://www.rpajournal.com/dev/wp-content/uploads/2014/10/A2.pdf

Mason, M. (Ed.). (2008). Complexity theory and the philosophy of education. Chichester, UK: Wiley-Blackwell.

McCombs, B., & Miller, L. (2008). A school leader’s guide to creating learner-centered education: From complexity to simplicity. Thousand Oaks, CA: Corwin Press.

McCombs, B. L., Daniels, D. H., & Perry, K. E. (2008). Children’s and teachers’ perceptions of learner-centered practices, and student motivation: Implications for early schooling. The Elementary School Journal, 109(1), 16–35. https://dx.doi.org/10.1086/592365

McKinsey Report. (2007). How the world’s best performing school systems come out on top. http://www.smhc-cpre.org/wp-content/uploads/2008/07/how-the-worlds-best-performing-school-systems-come-out-on-top-sept-072.pdf

Norris, D., Baer, L., & Offerman, M. (2009). A national agenda for action analytics, White Paper. National Symposium on Action Analytics, 21–23 September 2009, St. Paul, Minnesota, USA.

OECD. (2001). Investing in competencies for all. Meeting of OECD Education Ministers. Retrieved from http://www.oecd.org/innovation/research/1870557.pdf

Opfer, V., & Pedder, D. (2011). The lost promise of teacher professional development in England. European Journal of Teacher Education, 34(1), 3–24. http://dx.doi.org/10.1080/02619768.2010.534131

Pedder, D. (2006). Organizational conditions that foster successful classroom promotion of learning how to learn. Research Papers in Education, 21(2), 171–200. http://dx.doi.org/10.1080/02671520600615687

Pedder, D. (2007). Profiling teachers’ professional learning practices and values: Differences between and within schools. The Curriculum Journal, 18(3), 231–252. http://dx.doi.org/10.1080/09585170701589801

Pedder, D., James, M., & MacBeath, J. (2005). How teachers value and practise professional learning. Research Papers in Education, 20(3), 209–243. http://dx.doi.org/10.1080/02671520500192985

Piety, P. J., Hickey, D. T., & Bishop, M. J. (2014). Educational data sciences: Framing emergent practices for analytics of learning, organizations, and systems. Proceedings of the 4th International Conference on Learning Analytics and Knowledge (LAK ’14), 24–28 March 2014, Indianapolis, IN, USA (pp. 193–202). New York: ACM. http://dx.doi.org/10.1145/2567574.2567582

Reay, D., & Wiliam, D. (1999). ‘I’ll be a nothing’: Structure, agency and the construction of identity through assessment. British Educational Research Journal, 25(3), 343–354. http://dx.doi.org/10.1080/0141192990250305

Ritchie, R., & Deakin Crick, R. (2007). Distributing leadership for personalising learning. London: Continuum.

Robinson, V., Lloyd, C., & Rowe, K. (2009). The impact of leadership on student outcomes: An analysis of the differential effects of leadership types. Educational Administration Quarterly, 45(3), 515–520. http://dx.doi.org/10.1177/0013161x09335140

Rychen, D., & Salganik, L. (2001). Definition and selection of key competencies. Theoretical and Conceptual Foundations (DeSeCo) Background paper, Swiss Federal Statistical Office, OECD, ESSI, Neuchâtel.

Selvin, A., & Buckingham Shum, S. (2014). Constructing knowledge art: An experiential perspective on crafting participatory representations. Williston, VT: Morgan & Claypool.

Shafer, G. (1976). A mathematical theory of evidence. Princeton, NJ: Princeton University Press.

Silins, H., & Mulford, B. (2004). Schools as learning organisations: Effects on teacher leadership and student outcomes. School Effectiveness and School Improvement, 15, 443–466. http://dx.doi.org/10.1080/09243450512331383272

Sillitto, H. (2015). Architecting systems: Concepts, principles and practice. London: College Publications Systems Series.

Snowden, D., & Boone, M. E. (2007, November). A leader’s framework for decision making. Harvard Business Review. https://hbr.org/2007/11/a-leaders-framework-for-decision-making

Thomas, D., & Seely Brown, J. (2009). Learning for a world of constant change: Homo sapiens, Homo faber & Homo ludens revisited. Paper presented at the 7th Glion Colloquium, June 2009, University of Southern California. http://www.johnseelybrown.com/Learning%20for%20a%20World%20of%20Constant%20Change.pdf

Thomas, D., & Seely Brown, J. (2011). A new culture of learning: Cultivating the imagination for a world of constant change. CreateSpace Independent Publishing Platform.

West-Burnham, J. (2005). Leadership for personalizing learning. In S. de Freitas & C. Yapp (Eds.), Personalizing learning in the 21st century. Stafford, UK: Network Educational Press.

White, L. (2006). Evaluating problem-structuring methods: Developing an approach to show the value and effectiveness of PSMs. Journal of the Operational Research Society, 57, 842–845. http://dx.doi.org/10.1057/palgrave.jors.2602149

Yeager, D., Bryk, A., Muhich, J., Hausman, H., & Morales, L. (2015). Practical measurement. San Francisco, CA: Carnegie Foundation for the Advancement of Teaching.

Zohar, D. (1997). Rewiring the corporate brain. San Francisco, CA: Berrett-Koehler Publishers.
